Paper Accepted to SBRC 2024!

Our paper entitled “Residual-based Adaptive Huber Loss (RAHL) - Design of an Improved Huber Loss for CQI Prediction in 5G Networks” was just accepted for publication at the Brazilian Symposium on Computer Networks and Distributed Systems (SBRC 2024).

The deployment of fifth-generation (5G) networks represents a significant leap forward in telecommunications. Radio signal quality indicators are indispensable for managing 5G links and ensuring optimal communication. User Equipment (UE) collects these indicators and reports them to the evolved Node B (eNB), which serves as the base station. The eNB’s radio network controller then uses this information to adjust channel modulation and improve the communication links for UEs.

However, this process is reactive: the collected indicators describe the channel’s recent past, and relying solely on reactive adjustments may fall short of optimal performance, especially on 5G links, which use short-range, high-frequency radio signals and serve mobile UEs. To overcome this limitation and enable more proactive network management, there is a growing need for predictive analytics and machine learning algorithms. These techniques can analyze historical data, identify patterns, and forecast potential issues or changes in the network. With such predictive insights, network operators can act proactively, optimizing channel modulation, resource allocation, and overall network performance (Parera et al., 2019; Sakib et al., 2021; Yin et al., 2020; Silva et al., 2021).

In the design of the communication system, the Channel Quality Indicator (CQI) is a crucial parameter: it encodes the state of the channel, allowing base stations to adapt service quality to real-time channel conditions (Yin et al., 2020). However, accurately forecasting CQI is challenging, because it is an integer index ranging from 0 to 15 that changes rapidly and is influenced by various environmental factors. Incorrect predictions can significantly degrade the quality of the 5G channel, since the base station relies on the reported CQI to choose the modulation and allocate resources so as to optimize bandwidth usage. This can reduce users’ Quality of Experience (QoE), lowering application data rates and wasting network resources.
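Because CQI is a discrete index, a regression model’s real-valued output has to be mapped back into the valid range before it could be used as a CQI report. The snippet below is a minimal sketch assuming simple rounding and clipping; the post does not say how the paper handles this step, and `to_cqi_index` is a hypothetical helper.

```python
# Hypothetical post-processing step (an assumption, not taken from the paper):
# a regression model emits real-valued CQI estimates, while the reported CQI
# is an integer index from 0 to 15, so each estimate is rounded and clipped.
CQI_MIN, CQI_MAX = 0, 15


def to_cqi_index(prediction: float) -> int:
    """Map one real-valued prediction to a valid integer CQI index."""
    # round() uses round-half-to-even, e.g. round(9.5) == 10 and round(10.5) == 10
    return max(CQI_MIN, min(CQI_MAX, round(prediction)))


predicted = [-0.7, 3.4, 9.5, 12.51, 17.2]
print([to_cqi_index(p) for p in predicted])  # [0, 3, 10, 13, 15]
```

Out-of-range estimates such as -0.7 and 17.2 are pulled back to the boundary indices 0 and 15.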

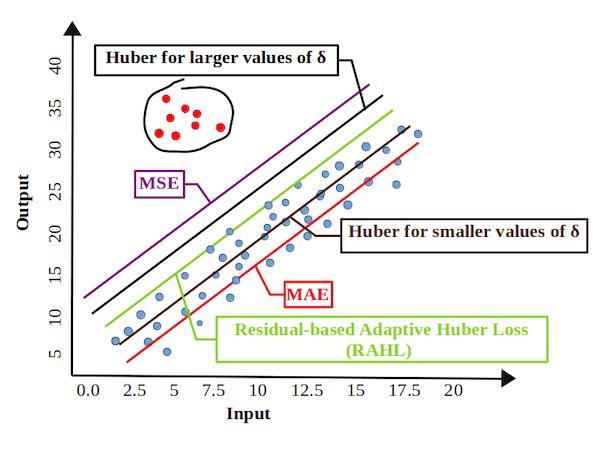

Machine learning models, though powerful, struggle to predict CQI accurately because of its abrupt shifts and fluctuations, which can lead to suboptimal performance. Alternative approaches are therefore needed. Choosing the error metric for CQI prediction, whether Mean Squared Error (MSE) or Mean Absolute Error (MAE), is complicated by the limitations of both metrics and by the specific conditions that affect CQI accuracy in the 5G ecosystem: MSE squares each residual, so a few large errors caused by sudden shifts can dominate it, while MAE weights all residuals linearly and is therefore less sensitive to them.
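To make the MSE/MAE trade-off concrete, the sketch below scores a predictor that follows the smooth trend of a CQI trace but misses one abrupt drop. The trace and predictions are made-up illustrative values, not data from the paper.

```python
# Illustrative (made-up) CQI trace with one abrupt drop, and a predictor that
# follows the smooth trend but misses the sudden shift.
actual = [12, 12, 13, 12, 4, 12, 13, 12]
predicted = [12, 12, 12, 12, 12, 12, 12, 12]


def mse(y_true, y_pred):
    """Mean Squared Error: squares each residual, so large errors dominate."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean Absolute Error: weights every residual linearly."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


print(f"MSE = {mse(actual, predicted):.3f}")  # MSE = 8.250
print(f"MAE = {mae(actual, predicted):.3f}")  # MAE = 1.250
```

The single drop contributes 64 of the 66 summed squared errors, so MSE is driven almost entirely by one sample, whereas its contribution to MAE grows only linearly with the size of the miss.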

To address this, we explore the Huber loss function (Gökcesu and Gökcesu, 2021), whose piecewise structure makes it more robust to outliers than MSE (Raca et al., 2020). The Huber loss blends the quadratic (MSE) loss for small errors with the absolute-value (MAE) loss for large ones, offering a user-controlled trade-off via a hyperparameter called delta, which sets the residual size at which the loss switches from one regime to the other. However, manually setting delta to balance sensitivity to small errors (the MSE-like region) against robustness to outliers (the MAE-like region) can be challenging. Motivated by this, we propose the Residual-based Adaptive Huber Loss (RAHL), which turns delta into a trainable parameter. This lets models learn the appropriate degree of outlier robustness during training, striking a balance between outlier resistance and inlier accuracy (Gökcesu and Gökcesu, 2021).
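For reference, the standard Huber loss for a residual r is 0.5·r² when |r| ≤ delta and delta·(|r| − 0.5·delta) otherwise. The sketch below implements that standard definition (not RAHL itself) and evaluates it on the same kind of made-up CQI trace for several fixed values of delta, to show how delta moves the loss between MAE-like and MSE-like behavior.

```python
def huber(residual: float, delta: float) -> float:
    """Standard Huber loss for one residual r = y_true - y_pred.

    Quadratic (0.5 * r**2) when |r| <= delta, linear beyond it;
    the two pieces meet with matching value and slope at |r| == delta.
    """
    a = abs(residual)
    if a <= delta:
        return 0.5 * a ** 2
    return delta * (a - 0.5 * delta)


def mean_huber(y_true, y_pred, delta: float) -> float:
    """Average Huber loss over paired targets and predictions."""
    return sum(huber(t - p, delta) for t, p in zip(y_true, y_pred)) / len(y_true)


# Made-up CQI trace with one abrupt drop (illustrative values only).
actual = [12, 12, 13, 12, 4, 12, 13, 12]
predicted = [12] * 8

for delta in (0.5, 1.0, 2.0, 8.0):
    print(f"delta = {delta}: mean Huber loss = {mean_huber(actual, predicted, delta):.4f}")
```

Once delta covers the largest residual (8 here), the loss equals half the MSE (4.125 versus 8.25); as delta shrinks, the outlier’s contribution grows only linearly and the loss behaves like a scaled MAE. Making delta trainable takes more than handing it to the optimizer: for |r| > delta the derivative of this loss with respect to delta is |r| − delta > 0, so minimizing the loss alone would push delta toward zero. See the paper for how RAHL handles this.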

Are you looking for a robust loss function for forecasting signal quality indicators in 5G networks? Check out our paper!