Paper Accepted to SIBGRAPI 2023!

Our paper, entitled “Tightening Classification Boundaries in Open Set Domain Adaptation through Unknown Exploitation”, has just been accepted for publication at the SIBGRAPI - Conference on Graphics, Patterns and Images.

In the last few years, Deep Learning (DL) methods have brought revolutionary results to several research areas, largely thanks to Convolutional Neural Networks (CNNs) in computer vision problems (Geng et al., 2021). In general, those methods are expected to work under unrealistic assumptions, such as a controlled environment with a fully labeled dataset and a Closed Set (CS) of categories (Geng et al., 2021). However, these assumptions rarely hold in uncontrolled environments, such as real-world problems.

In uncontrolled environments, we face two main problems: a reduced level of supervision and no control over the incoming data. For the first problem, it is usual to train the model on a fully annotated dataset (i.e., the source domain) that should be similar to the unlabeled target dataset (i.e., the target domain). However, since the two datasets may come from different underlying distributions, this can induce the domain-shift problem (Silva et al., 2021). For the second problem, the training and test data can present some level of category shift, since we do not know the data beforehand. This requires the model to handle examples from possibly unknown/unseen categories during inference (Chen et al., 2022). Busto and Gall (2017) were the first to describe Open Set Domain Adaptation (OSDA), the more realistic and challenging scenario in which both problems occur at the same time.

Most OSDA methods rely on rejecting unknown samples in the target domain and aligning known target samples with the source domain. To do so, they draw known and unknown sets from the high-confidence samples of the target domain. To alleviate the domain shift, positive samples from the known set are aligned with the source domain (Bucci et al., 2020; Rakshit et al., 2020; Bucci et al., 2022). Negative samples from the unknown set, on the other hand, are usually underexploited during training: they are often simply assigned to an additional classifier logit that represents the unknown category and is learned with supervision along with the known classes (Liu et al., 2022).
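To make the "additional logit" idea concrete, here is a minimal NumPy sketch, not taken from any of the cited methods. It assumes a classifier with K + 1 outputs, where index K stands for the unknown category, and it assumes that target samples selected as high-confidence negatives receive that index as a pseudo-label. The function name `cross_entropy_with_unknown` and all values are hypothetical.

```python
import numpy as np

def cross_entropy_with_unknown(logits, labels):
    """Mean cross-entropy over K + 1 logits; the last index is the unknown class."""
    logits = np.asarray(logits, dtype=float)
    labels = np.asarray(labels, dtype=int)
    # Numerically stable log-softmax.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()

num_known = 3              # K known classes (illustrative value)
unknown_label = num_known  # index K is reserved for "unknown"

# Two labeled source samples plus one target negative pseudo-labeled as unknown.
logits = np.array([
    [2.0, 0.1, 0.1, 0.0],  # source sample, class 0
    [0.1, 2.0, 0.1, 0.0],  # source sample, class 1
    [0.0, 0.1, 0.1, 2.0],  # target negative, treated as unknown
])
labels = np.array([0, 1, unknown_label])
loss = cross_entropy_with_unknown(logits, labels)
```

In this setup the negatives only train the extra logit, which is the sense in which they are described as underexploited.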

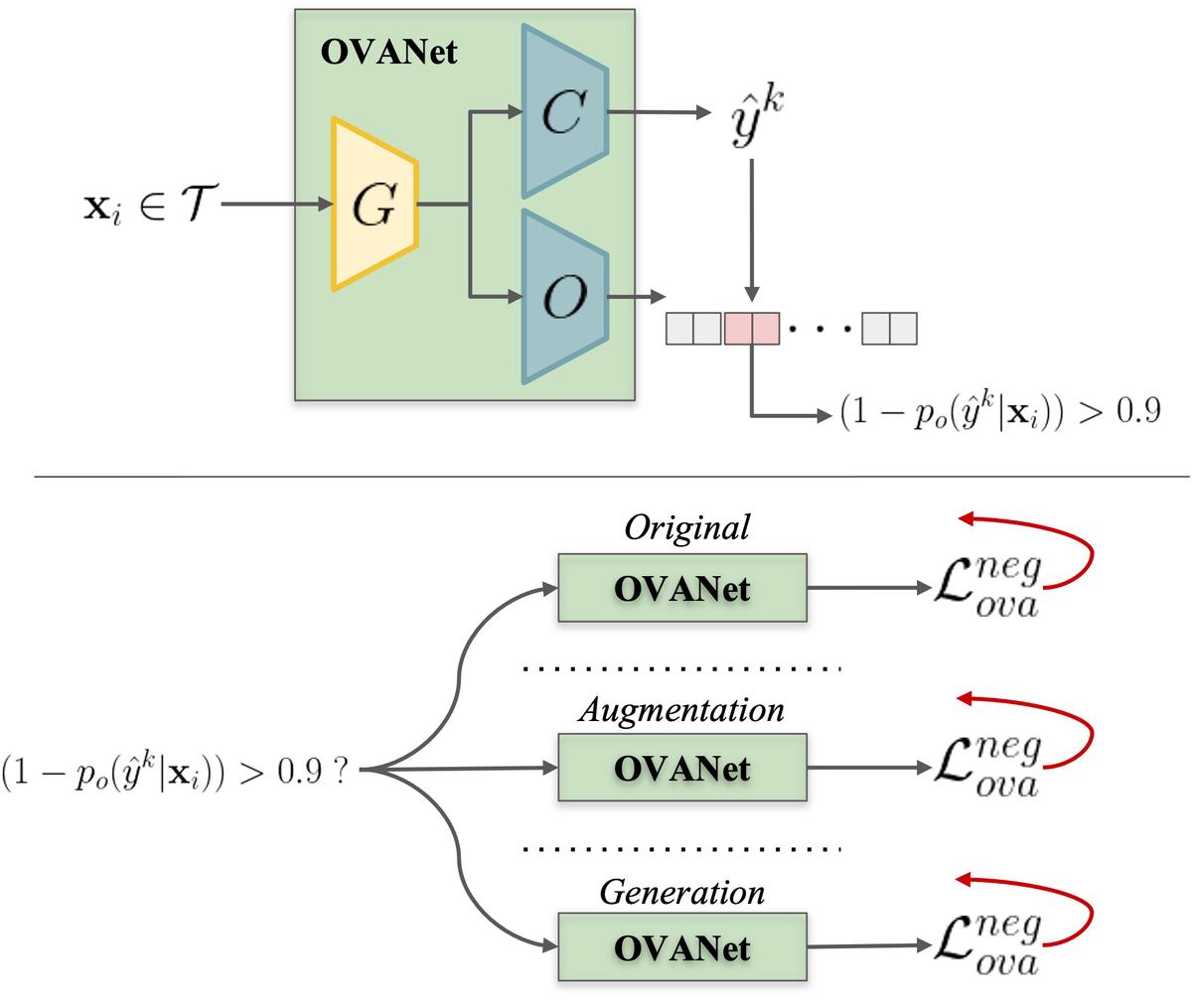

Following recent work on unknown exploitation (Liu et al., 2022; Baktashmotlagh et al., 2022) and on the relationship between closed-set and open-set recognition (Vaze et al., 2022), we hypothesize that using the unknown set of the target domain to tighten the boundaries of the closed-set classifier can further improve classification performance. We investigate this hypothesis using OVANet (Saito and Saenko, 2021), a UNiversal Domain Adaptation (UNDA) method, which we extend in three ways in our evaluations for the OSDA setting.

We first extract high-confidence negatives from the target domain based on a higher confidence threshold (Rakshit et al., 2020). We then (1) evaluate the direct use of pure negatives as a new constraint for the known classification (original approach); (2) use data augmentation to randomly transform negatives before applying them as the classification constraint (augmentation approach); and (3) create negative/adversarial examples with a Generative Adversarial Network (GAN) model trained on such negatives, whose objective is to deceive OVANet by posing synthetic instances as positive samples that it must later learn to reject (generation approach).
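The extraction step can be pictured with a small NumPy sketch. This is only an illustration under assumptions, not the code from the paper: it assumes each target sample already has an "unknown score" in [0, 1] (for example, one minus the probability of being known), and the function name `split_by_confidence` and the threshold values are hypothetical.

```python
import numpy as np

def split_by_confidence(unknown_score, neg_threshold=0.9, pos_threshold=0.1):
    """Return indices of high-confidence negatives and positives.

    unknown_score: per-sample score in [0, 1]; higher means "more likely unknown".
    Samples between the two thresholds are left out of both sets.
    """
    unknown_score = np.asarray(unknown_score, dtype=float)
    negatives = np.flatnonzero(unknown_score >= neg_threshold)
    positives = np.flatnonzero(unknown_score <= pos_threshold)
    return negatives, positives

# Hypothetical scores for five target samples.
scores = np.array([0.95, 0.50, 0.05, 0.92, 0.30])
neg_idx, pos_idx = split_by_confidence(scores)
```

The selected negatives are what the three approaches above would then consume: directly, after random augmentation, or as training data for the GAN.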

Do you want to know more about using unknown samples to tighten the classification boundaries? Check our paper!